If you’ve ever wondered how to improve your AI applications and see what’s going on under the hood of your LLMs, you need a good LLM analytics and monitoring platform.

At this point, Large Language Models (LLMs) have gotten really good at predictive text generation and answering questions based on embedded documents. However, in a production environment, you still want to be able to troubleshoot problems and improve the quality of your chatflow responses. LLM analytics and monitoring can provide real-time insights that help you in five important ways.

- Debugging: LLMs can be complex, with many interconnected parts, making it challenging to find and fix issues. However, with logging and telemetry data that act like breadcrumbs, we can track down the source of a problem.

- Testing: Testing is our way of checking that our application performs well. Testing an LLM can be a bit of a rollercoaster, though, because these models are trained on such large datasets. While you may not necessarily have to test the LLM itself, you can test different components in your Flowise chatflow, such as memory, custom tools, and prompt chains.

- Evaluation: Since there’s no one-size-fits-all way to determine LLM quality, it’s going to be up to you to decide the quality of the responses for your application. With rapidly improving LLMs, though, the quality of your embedded data, especially for Conversational Retrieval, will determine the quality of your output responses.

- Tracing: Tracing is a way of following our chatflow’s journey from initial prompt to output response. Think of it as a way to ensure the LLM’s answers can be traced back to the original documents, so it’s not making things up or hallucinating. In Flowise, you can also have your chatflow return its source documents so you can see where it’s getting its information from. This can go a long way toward improving the quality of your responses.

- Usage Metrics: Monitoring how an LLM is used provides valuable insights for enhancing performance and usability. Common usage metrics include the number of requests per second, average usage time, and popular LLM tasks. Comparing metrics across different models assists in selecting the most suitable model for specific scenarios.
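To make the usage metrics above a bit more concrete, here is a minimal sketch of how they could be computed from your own request logs. The `RequestLog` schema, the model names, and the task labels are all hypothetical and only for illustration; Langfuse and Lunary calculate and display their own versions of these numbers for you.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestLog:
    """One logged chatflow request (hypothetical schema, for illustration only)."""
    timestamp: float   # when the request arrived, in seconds
    duration_s: float  # how long the request took, in seconds
    model: str         # model name as recorded by your own logger
    task: str          # free-form task label, e.g. "qa" or "summarize"


def summarize_usage(logs: list[RequestLog]) -> dict:
    """Compute requests per second, mean duration per model, and the most common task."""
    if not logs:
        return {"requests_per_second": 0.0, "avg_duration_by_model": {}, "top_task": None}

    timestamps = [log.timestamp for log in logs]
    window = max(timestamps) - min(timestamps)
    # Avoid dividing by zero when every request shares one timestamp.
    rps = len(logs) / window if window > 0 else float(len(logs))

    # Group durations by model so models can be compared side by side.
    durations: dict[str, list[float]] = {}
    for log in logs:
        durations.setdefault(log.model, []).append(log.duration_s)

    return {
        "requests_per_second": rps,
        "avg_duration_by_model": {m: mean(d) for m, d in durations.items()},
        "top_task": Counter(log.task for log in logs).most_common(1)[0][0],
    }


# Made-up sample data: four requests spread over four seconds.
sample_logs = [
    RequestLog(0.0, 1.0, "model-a", "qa"),
    RequestLog(1.0, 3.0, "model-a", "qa"),
    RequestLog(2.0, 2.0, "model-b", "summarize"),
    RequestLog(4.0, 4.0, "model-b", "qa"),
]
usage = summarize_usage(sample_logs)
print(usage)
```

Grouping the durations by model is what lets you compare models against each other, which is the comparison the Usage Metrics bullet describes.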

How to Install LLM Analytics for Flowise

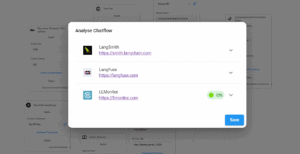

Flowise comes integrated with three analytics platforms: Langfuse, LLM Monitor (Lunary), and LangSmith.

At the time of this writing, LangSmith is still invite-only, so I was only able to try Langfuse and LLM Monitor, now called Lunary. Both are open source projects, so you can self-host them on your own server. However, I used the cloud versions of both platforms.

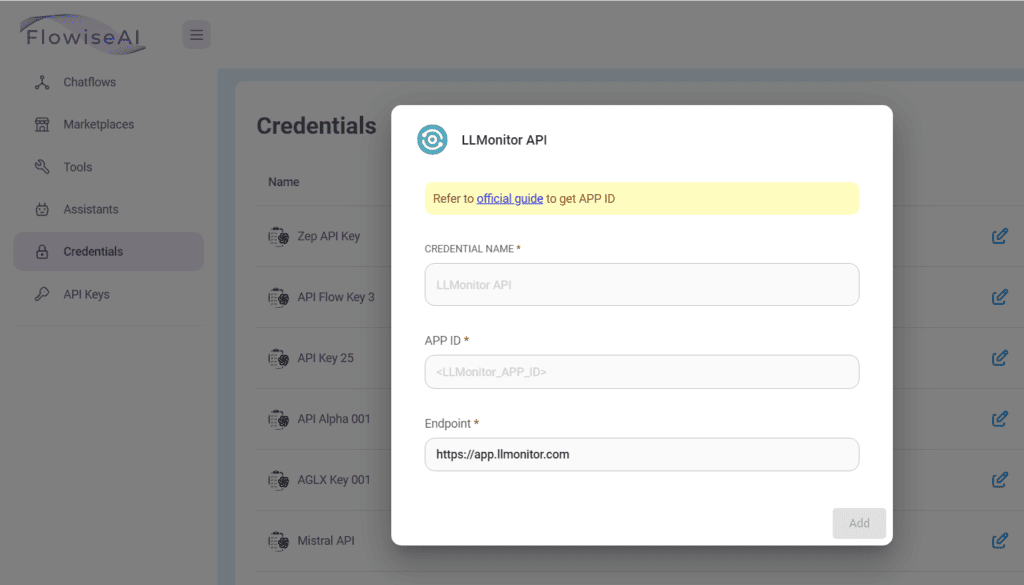

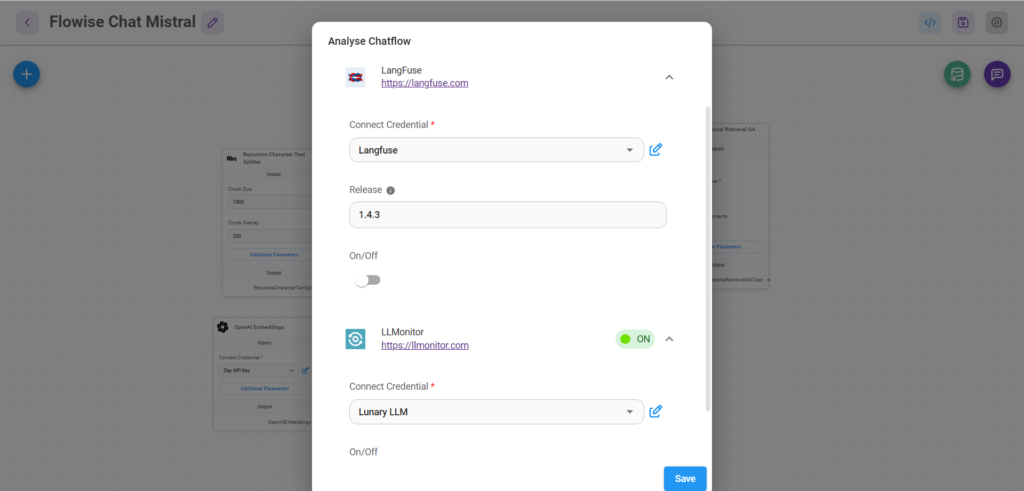

Accessing both tools in Flowise is pretty straightforward. Once you sign up for an account at either Langfuse or Lunary, you copy and paste the API keys into Flowise as credentials. After that, you can select those credentials when choosing which analytics tool you want to use for your chatflow.

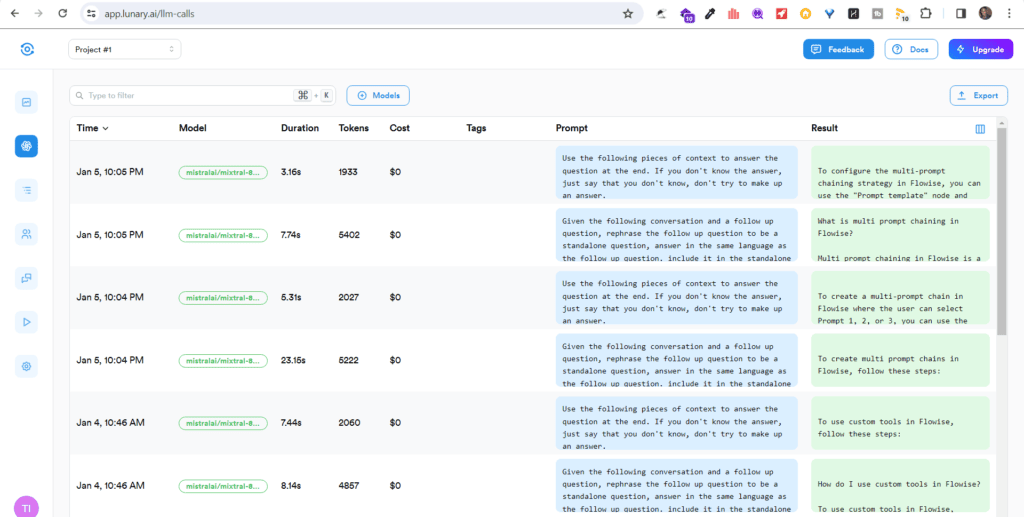

Once we have entered our credentials and activated the platform we want (in this case Lunary), we can start monitoring our prompts and output responses, along with an estimate of the tokens used during a query and response.

As you can see below, we are able to track calls to our LLM and see exactly how our prompts are working.

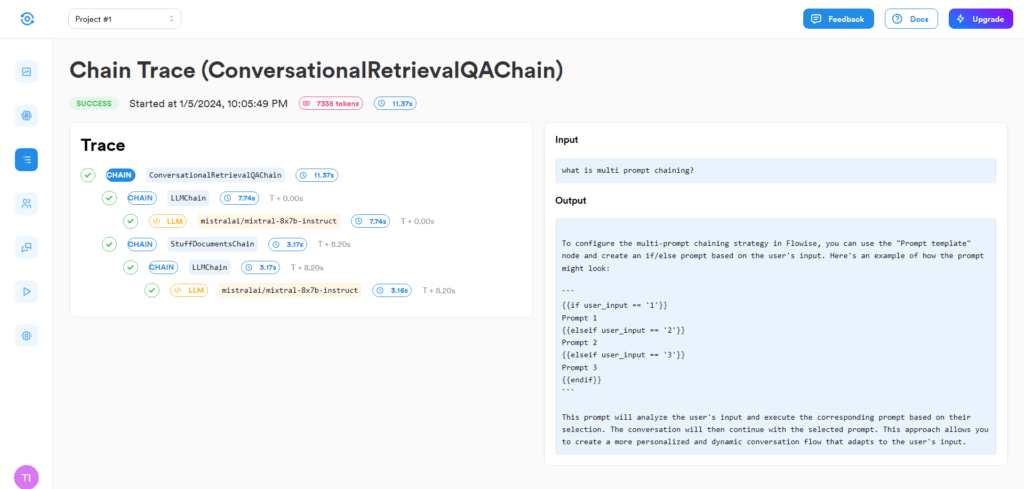

Lunary also provides traces so we can see each step of the execution chain, including the elapsed time in seconds. This can often help us spot performance issues and reduce latency in our chatflows.
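If you want to run the same kind of check on trace data yourself, a rough sketch might look like the one below. The `Span` class, the step names, and the timings are made up for illustration; this is not Lunary’s export format, just a way to show how per-step elapsed times point you to the slowest part of a chain.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """One step in an execution chain (hypothetical structure, not a Lunary type)."""
    name: str
    start_s: float  # step start time, in seconds from the start of the trace
    end_s: float    # step end time, in seconds from the start of the trace

    @property
    def elapsed_s(self) -> float:
        return self.end_s - self.start_s


def slowest_steps(spans: list[Span], top_n: int = 3) -> list[Span]:
    """Return the steps with the longest elapsed time, slowest first."""
    return sorted(spans, key=lambda span: span.elapsed_s, reverse=True)[:top_n]


# Made-up trace for a retrieval chatflow.
trace = [
    Span("retrieve_documents", 0.0, 0.5),
    Span("build_prompt", 0.5, 0.75),
    Span("llm_call", 0.75, 3.25),
]

total_s = sum(span.elapsed_s for span in trace)
for span in slowest_steps(trace):
    share = span.elapsed_s / total_s
    print(f"{span.name}: {span.elapsed_s:.2f}s ({share:.0%} of total)")
```

In this invented trace the LLM call dominates the total time, so that is where you would look first when trying to reduce latency.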

Using an analytics tool also helps you see how your chatflow history works and how previous responses are added to the prompt context window to answer related questions.

Another real-time insight is the number of tokens consumed. If your chatflow is using a chat history, for example, that can significantly increase your token usage and affect LLM costs.
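To see why chat history drives up token usage, here is a small sketch under two stated assumptions: the full history is resent with every new question, and a token is roughly four characters. The four-character ratio is only a rule of thumb, and the function names are hypothetical; for real numbers, rely on your model’s tokenizer or the counts reported by your analytics tool.

```python
import math

# Rough rule of thumb, NOT an exact tokenizer: about 4 characters per token.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def prompt_tokens_per_turn(turns: list[tuple[str, str]]) -> list[int]:
    """Estimate the prompt size for each turn, assuming the whole history is resent.

    `turns` is a list of (question, answer) pairs in conversation order.
    """
    history_tokens = 0
    per_turn = []
    for question, answer in turns:
        question_tokens = estimate_tokens(question)
        # The prompt for this turn is all prior history plus the new question.
        per_turn.append(history_tokens + question_tokens)
        # Both the question and its answer join the history for the next turn.
        history_tokens += question_tokens + estimate_tokens(answer)
    return per_turn


# Placeholder conversation: 40-character questions and 80-character answers.
conversation = [
    ("a" * 40, "b" * 80),
    ("c" * 40, "d" * 80),
    ("e" * 40, "f" * 80),
]
growth = prompt_tokens_per_turn(conversation)
print(growth)  # [10, 40, 70]
```

Even though every question here is the same length, the estimated prompt grows each turn because the earlier questions and answers ride along with it.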

Both Langfuse and LLM Monitor/Lunary do a great job of showing you your traces, although I tended to prefer Lunary’s UI and its ability to estimate my token usage.

Depending on your LLM and chatflow, you may get different results, so you’ll just have to test it for yourself. I’ve found these tools incredibly useful for sorting out issues in my chatflow.

Langfuse:

langfuse.com

LLM Monitor (Lunary):

lunary.ai